Markov chain calculator | absorbing Markov chain calculator



Markov chain calculator: calculate the nth-step probability vector and the steady-state vector for a Markov chain with any number of states and steps. Enter the transition matrix, the initial state, and the number of decimal places to see the results and the formula steps.

Markov chain calculator and steady-state vector calculator: calculates the nth-step probability vector, the steady-state vector, the absorbing …

The probability vector shows the probability of being in each state. The sum of all the elements in the probability vector is one. The nth-step probability …
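The nth-step probability vector is the initial vector multiplied by the transition matrix n times, \(v_n = v_0 P^n\). Below is a minimal pure-Python sketch, assuming the row convention (P[i][j] is the probability of moving from state i to state j, so each row sums to 1). The function names, the optional `decimals` rounding, and the two-state example matrix are illustrative choices, not the calculator's own code.

```python
# Sketch: nth-step probability vector v_n = v_0 P^n for a finite Markov chain.
# Convention assumed here: P[i][j] is the probability of moving from state i
# to state j, so every row of P sums to 1 and v is a row vector.


def step(v, P):
    """Advance the probability vector v by one transition (v -> v P)."""
    n = len(P)
    return [sum(v[i] * P[i][j] for i in range(n)) for j in range(n)]


def nth_step_vector(v0, P, steps, decimals=None):
    """Apply `steps` transitions to v0, optionally rounding the result."""
    v = list(v0)
    for _ in range(steps):
        v = step(v, P)
    if decimals is not None:
        v = [round(x, decimals) for x in v]
    return v


if __name__ == "__main__":
    P = [[0.6, 0.4],
         [0.3, 0.7]]
    v0 = [1.0, 0.0]  # start in the first state with certainty
    print(nth_step_vector(v0, P, 1, decimals=4))  # [0.6, 0.4]
    print(nth_step_vector(v0, P, 2, decimals=4))  # [0.48, 0.52]
```

Each step is a plain vector-matrix product, so the cost is \(O(n^2)\) per step; for very many steps, raising the matrix to a power first may be cheaper.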

Usually, the probability vector after one step will not be the same as the probability vector after two steps. But often, after several steps, the …
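One way to watch the vector settle is to keep applying the transition matrix until successive vectors stop changing. This is a sketch under stated assumptions: the tolerance `tol`, the step cap `max_steps`, and the function name are hypothetical choices, and the loop is capped because a periodic chain may never settle.

```python
# Sketch: approximate the steady-state vector by applying v -> v P until the
# vector stops changing. Convergence is not guaranteed: a periodic chain can
# cycle forever, so the loop is capped and reports failure.


def steady_state_by_iteration(P, v0, tol=1e-12, max_steps=10_000):
    """Return (vector, steps) once successive vectors differ by < tol."""
    n = len(P)
    v = list(v0)
    for k in range(1, max_steps + 1):
        nxt = [sum(v[i] * P[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(nxt, v)) < tol:
            return nxt, k
        v = nxt
    raise RuntimeError("vector did not settle; the chain may be periodic")


if __name__ == "__main__":
    P = [[0.6, 0.4],
         [0.3, 0.7]]
    vec, k = steady_state_by_iteration(P, [1.0, 0.0])
    print([round(x, 6) for x in vec], "after", k, "steps")
```

For the two-state matrix used here the vector approaches roughly (0.4286, 0.5714); for a chain that alternates between two states the function raises instead of returning a misleading answer.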

Use this online tool to input data and calculate transition probabilities, state vectors, and limiting distributions for Markov chains. Learn the basics of Markov chains, their …

Enter a transition matrix and an initial state vector to run a Markov chain process. Learn the formula, concepts, and examples of Markov chains and matrices.

Calculator for Finite Markov Chain Stationary Distribution (Riya Danait, 2020): input the probability matrix P (\(P_{ij}\) is the transition probability from i to j). Takes space-separated input: …

Learn how to create and run a Markov chain, a probabilistic model of state transitions. Use the matrix, graph, and examples to explore different scenarios and probabilities.

Write transition matrices for Markov chain problems. Use the transition matrix and the initial state vector to find the state vector that gives the distribution after a …
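Before running any of these calculations, the entered matrix has to be a valid transition matrix. The exact input format of these calculators is not given beyond "space separated", so this sketch assumes one row per line; the function name and the tolerance are hypothetical.

```python
# Sketch: read a transition matrix typed as space-separated numbers, one row
# per line (an assumed input format), and check that it is a valid
# transition matrix: square, no negative entries, every row summing to 1.


def parse_transition_matrix(text, tol=1e-9):
    rows = [[float(tok) for tok in line.split()]
            for line in text.strip().splitlines() if line.strip()]
    n = len(rows)
    if n == 0 or any(len(row) != n for row in rows):
        raise ValueError("transition matrix must be square")
    for i, row in enumerate(rows):
        if any(p < 0 for p in row):
            raise ValueError(f"row {i} has a negative entry")
        # Allow tiny floating-point error when checking the row sum.
        if abs(sum(row) - 1.0) > tol:
            raise ValueError(f"row {i} sums to {sum(row)}, not 1")
    return rows


if __name__ == "__main__":
    print(parse_transition_matrix("0.6 0.4\n0.3 0.7"))  # [[0.6, 0.4], [0.3, 0.7]]
```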

Calculator for finite Markov chain (by FUKUDA Hiroshi, 2004.10.12). Input the probability matrix P (\(P_{ij}\) is the transition probability from i to j), for example:

\[ P = \begin{pmatrix} 0.6 & 0.4 \\ 0.3 & 0.7 \end{pmatrix} \]

probability vector in stable …

A Markov chain is a collection of random variables \(\{X_t\}\) (where the index \(t\) runs through 0, 1, …) having the property that, given the present, the future is conditionally independent of the past. In other words, if a Markov sequence of random variates \(X_n\) takes the discrete values \(a_1, \ldots, a_N\), then

\[ P(X_n = a_{i_n} \mid X_{n-1} = a_{i_{n-1}}, \ldots, X_1 = a_{i_1}) = P(X_n = a_{i_n} \mid X_{n-1} = a_{i_{n-1}}), \]

and the sequence \(X_n\) is called a Markov chain.

Markov Chain Calculator: this calculator computes the state vector of a Markov chain at a given time step.

Markov chain matrix | Desmos
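The stable probability vector for a matrix like the 0.6 0.4 / 0.3 0.7 example can be computed exactly by solving \(\pi P = \pi\) together with \(\sum_i \pi_i = 1\). This is a sketch using Gauss-Jordan elimination (our choice of solver, not necessarily what the calculator uses), and it assumes the chain has a unique stationary distribution, for example because it is irreducible.

```python
# Sketch: exact stationary ("stable") vector of a finite Markov chain.
# Solves pi P = pi together with sum(pi) = 1 using Gauss-Jordan elimination
# with partial pivoting. Assumes a unique stationary distribution exists
# (for example, an irreducible chain); otherwise ValueError is raised.


def stationary_distribution(P):
    n = len(P)
    # pi P = pi  <=>  (P^T - I) pi = 0. Row j of A holds column j of P - I.
    A = [[P[i][j] - (1.0 if i == j else 0.0) for i in range(n)] for j in range(n)]
    b = [0.0] * n
    # These n equations are linearly dependent, so replace the last one
    # with the normalization condition pi_1 + ... + pi_n = 1.
    A[-1] = [1.0] * n
    b[-1] = 1.0
    M = [row + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("no unique stationary distribution")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


if __name__ == "__main__":
    P = [[0.6, 0.4],
         [0.3, 0.7]]
    print(stationary_distribution(P))  # approximately [3/7, 4/7]
```

By hand, \(0.4\,\pi_1 = 0.3\,\pi_2\) and \(\pi_1 + \pi_2 = 1\) give \(\pi = (3/7,\ 4/7) \approx (0.4286,\ 0.5714)\), which is what the sketch returns up to rounding.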

A Markov chain is a stochastic model that uses mathematics to predict the probability of a sequence of events occurring based on the most recent event. A common example of a Markov …
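Because each event depends only on the most recent one, the probability of a whole sequence factors into an initial probability times one transition probability per step. A minimal sketch, assuming states are numbered 0..n-1 and `initial` is the starting distribution; the function name is hypothetical.

```python
# Sketch: probability of an entire sequence of states. By the Markov property,
# the joint probability factors into the initial probability times one
# transition probability per step:
#   P(X_0=s_0, ..., X_T=s_T) = initial[s_0] * P[s_0][s_1] * ... * P[s_{T-1}][s_T]


def path_probability(path, P, initial):
    prob = initial[path[0]]
    for current, nxt in zip(path, path[1:]):
        prob *= P[current][nxt]  # depends only on the current state
    return prob


if __name__ == "__main__":
    P = [[0.6, 0.4],
         [0.3, 0.7]]
    initial = [1.0, 0.0]
    print(path_probability([0, 0, 1, 1], P, initial))  # 1 * 0.6 * 0.4 * 0.7
```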

… the power to raise a number.

formula: a fact or a rule written with mathematical symbols; a concise way of expressing information symbolically.

Markov chain: a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event.

matrix: …

Fortunately, we don't have to examine too many powers of the transition matrix T to determine whether a Markov chain is regular (that is, whether some power of T has all positive entries); we use technology, calculators or computers, to do the calculations. There is a theorem that says that if an \(n \times n\) transition matrix represents \(n\) states, then we need only examine powers \(T^m\) up to …
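Checking regularity by computer only needs the zero/non-zero pattern of the powers of T. The bound in the text above is cut off; this sketch assumes Wielandt's bound \(m \le (n-1)^2 + 1\), which we supply ourselves, and the function name is hypothetical.

```python
# Sketch: test whether a transition matrix T is regular, i.e. whether some
# power T^m has only positive entries. Only the zero/non-zero pattern of T
# matters, so we work with booleans and avoid floating-point underflow.
# Assumption: powers are checked up to m = (n - 1)**2 + 1 (Wielandt's bound),
# which we supply ourselves because the bound in the text is cut off.


def is_regular(T):
    n = len(T)
    A = [[x > 0 for x in row] for row in T]   # pattern of T
    power = [row[:] for row in A]             # pattern of T^1
    for _ in range((n - 1) ** 2 + 1):
        if all(all(row) for row in power):
            return True
        # Next power: entry (i, j) is positive iff some k has
        # T^m[i][k] > 0 and T[k][j] > 0.
        power = [[any(power[i][k] and A[k][j] for k in range(n)) for j in range(n)]
                 for i in range(n)]
    return False


if __name__ == "__main__":
    print(is_regular([[0.6, 0.4], [0.3, 0.7]]))  # True: T itself is positive
    print(is_regular([[0.0, 1.0], [1.0, 0.0]]))  # False: the chain alternates
```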

A Markov chain is a stochastic process that describes a sequence of events in which the probability of each event depends only on the state of the system at the previous event. Markov Chains Calculator: this calculator computes the state distribution of a Markov chain after n steps.

A Markov chain is a random process with the Markov property. A random process, often called a stochastic process, is a mathematical object defined as a collection of random variables. A Markov chain has either a discrete state space (the set of possible values of the random variables) or a discrete index set (often representing time); given the fact …

Markov Chain Calculator Help. What's it for? Techniques exist for determining the long-run behaviour of Markov chains. Transition graph analysis can reveal the recurrent classes, matrix calculations can determine stationary distributions for those classes, and various theorems involving periodicity will reveal whether those stationary …

There is actually a very simple way to calculate it: it can be determined from entry (A, …) of the matrix obtained by raising the transition matrix to the power N. Types of …
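Entry (i, j) of \(P^N\) is the probability of going from state i to state j in exactly N steps. The entry named in the text is cut off, so the indices below are illustrative. This sketch computes \(P^N\) by repeated squaring, which is our choice of method; multiplying by P N times gives the same matrix. Function names are hypothetical.

```python
# Sketch: N-step transition probability as an entry of P^N.
# P^N is computed by repeated squaring (our choice of method; naive repeated
# multiplication gives the same matrix). States are indexed 0..n-1.


def mat_mul(A, B):
    n = len(B)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(len(B[0]))]
            for i in range(len(A))]


def mat_pow(P, N):
    """Return P^N for an integer N >= 0 (P^0 is the identity matrix)."""
    n = len(P)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    base = [row[:] for row in P]
    while N > 0:
        if N & 1:            # this bit of N contributes the current square
            result = mat_mul(result, base)
        base = mat_mul(base, base)
        N >>= 1
    return result


def n_step_probability(P, i, j, N):
    """Probability of being in state j exactly N steps after starting in i."""
    return mat_pow(P, N)[i][j]


if __name__ == "__main__":
    P = [[0.6, 0.4],
         [0.3, 0.7]]
    print(round(n_step_probability(P, 0, 1, 2), 4))  # 0.52
```

Repeated squaring needs about \(\log_2 N\) matrix products instead of N, which matters only when N is large.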

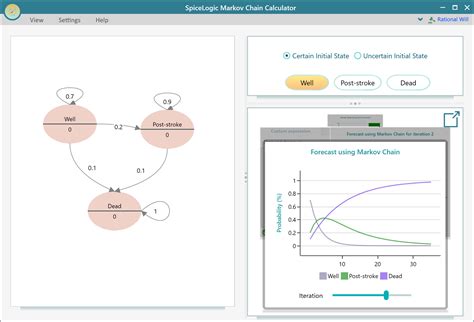

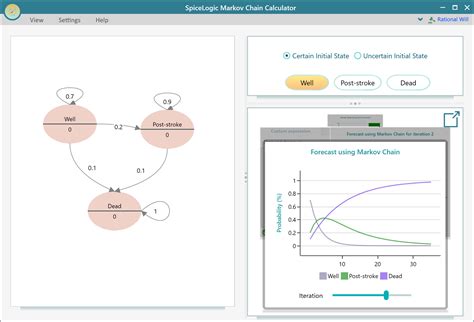

This Markov chain calculator lets you model a Markov chain with states, but no rewards can be attached to a state. If you want to attach a utility or reward to a state and then calculate the …

Markov Chain Molecular Descriptors (MCDs) have been widely used to solve cheminformatics problems. There are different types of Markov chain descriptors, such as Markov-Shannon entropies (Shk), Markov Means (Mk), Markov Moments (πk), etc. However, there are other possible MCDs that have not been used before. In addition, …

Markov chains are also perfect material for the final chapter, since they bridge the theoretical world that we've discussed and the world of applied statistics (Markov methods are becoming increasingly popular in nearly every discipline). … To calculate the eigenvectors of the transpose of \(Q\), use the code eigen(t(Q)) in R.

Markov chains illustrate many of the important ideas of stochastic processes in an elementary setting. This classical subject is still very much alive, with important developments in both theory and applications coming at an accelerating pace in recent decades.

1.1 Specifying and simulating a Markov chain

What is a Markov chain?

A Markov chain is an absorbing Markov chain if it has at least one absorbing state and, from every non-absorbing state, it is possible to eventually reach some absorbing state. A state i is an absorbing state if, once the system reaches state i, it stays in that state; that is, \(p_{ii} = 1\). If a transition matrix T for an absorbing Markov chain is raised to higher powers, the powers settle into a limiting matrix, called the solution matrix, and …

By Victor Powell, with text by Lewis Lehe. Markov chains, named after Andrey Markov, are mathematical systems that hop from one "state" (a situation or set of values) to another. For example, if you made a Markov chain model of a baby's behavior, you might include "playing," "eating," "sleeping," and "crying" as states, which together with other …
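The absorbing-chain ideas above can be checked numerically. This sketch finds the states with \(p_{ii} = 1\), tests the standard absorbing-chain condition (at least one absorbing state, and every state can reach one), and approximates the solution matrix by a high power of T. The step count, the function names, and the four-state example are hypothetical; the fundamental-matrix formula would give the limit exactly but is not used here.

```python
# Sketch: absorbing-chain checks and an approximate solution matrix.
# Assumptions: states are 0..n-1, T[i][j] is the probability of moving from
# i to j, and the solution matrix is approximated by a high power of T.


def mat_mul(A, B):
    n = len(B)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(len(B[0]))]
            for i in range(len(A))]


def absorbing_states(T):
    """States i with T[i][i] == 1 (exact comparison, fine for typed input)."""
    return [i for i in range(len(T)) if T[i][i] == 1.0]


def is_absorbing_chain(T):
    """At least one absorbing state, and every state can reach one."""
    reach = set(absorbing_states(T))
    if not reach:
        return False
    changed = True
    while changed:  # grow the set of states that can reach an absorbing state
        changed = False
        for i in range(len(T)):
            if i not in reach and any(T[i][j] > 0 for j in reach):
                reach.add(i)
                changed = True
    return len(reach) == len(T)


def solution_matrix(T, steps=200):
    """Approximate the limit of T^k as k grows by computing T^steps."""
    power = [row[:] for row in T]
    for _ in range(steps - 1):
        power = mat_mul(power, T)
    return power


if __name__ == "__main__":
    # Hypothetical example: a walk on states 0..3 that stops at 0 or 3.
    T = [[1.0, 0.0, 0.0, 0.0],
         [0.5, 0.0, 0.5, 0.0],
         [0.0, 0.5, 0.0, 0.5],
         [0.0, 0.0, 0.0, 1.0]]
    print(absorbing_states(T), is_absorbing_chain(T))  # [0, 3] True
    for row in solution_matrix(T):
        print([round(x, 4) for x in row])
```

In the example, a walk starting in state 1 ends in state 0 with probability 2/3 and in state 3 with probability 1/3, and the corresponding row of the approximate solution matrix approaches those values.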

